Motion Controller (2022) - Character Design meets Movement Research

Motion Controller was developed during the COVID-19 pandemic, inspired by a desire to encourage whole-body physical movement in a healthy and perhaps more enjoyable way. By translating body gestures into gameplay, the project offers a fun alternative way to stay active and engaged, bringing a positive, refreshing experience during challenging times.

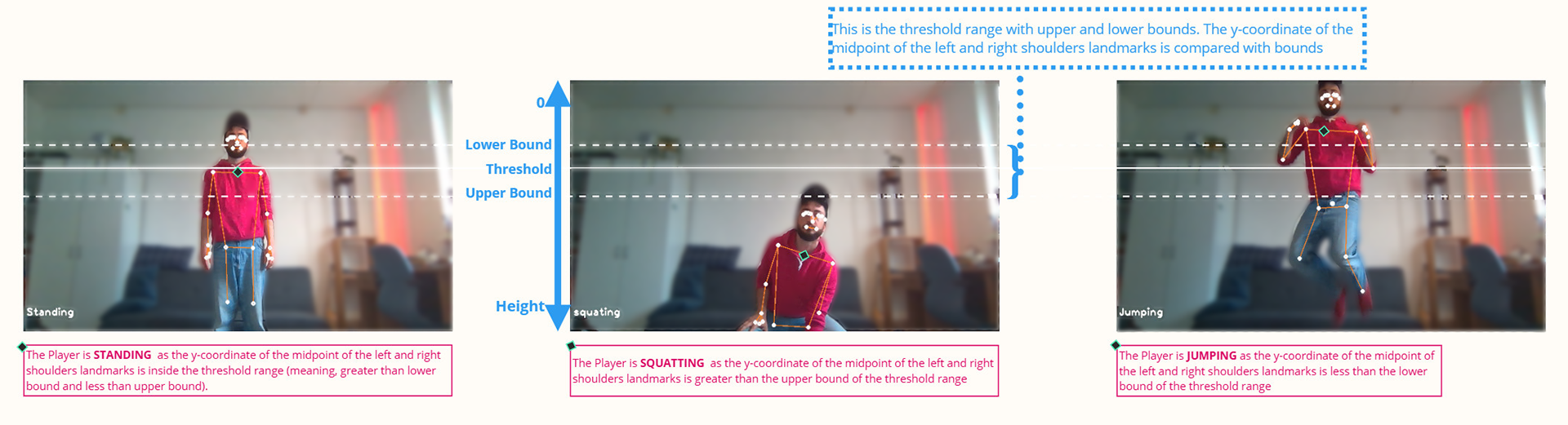

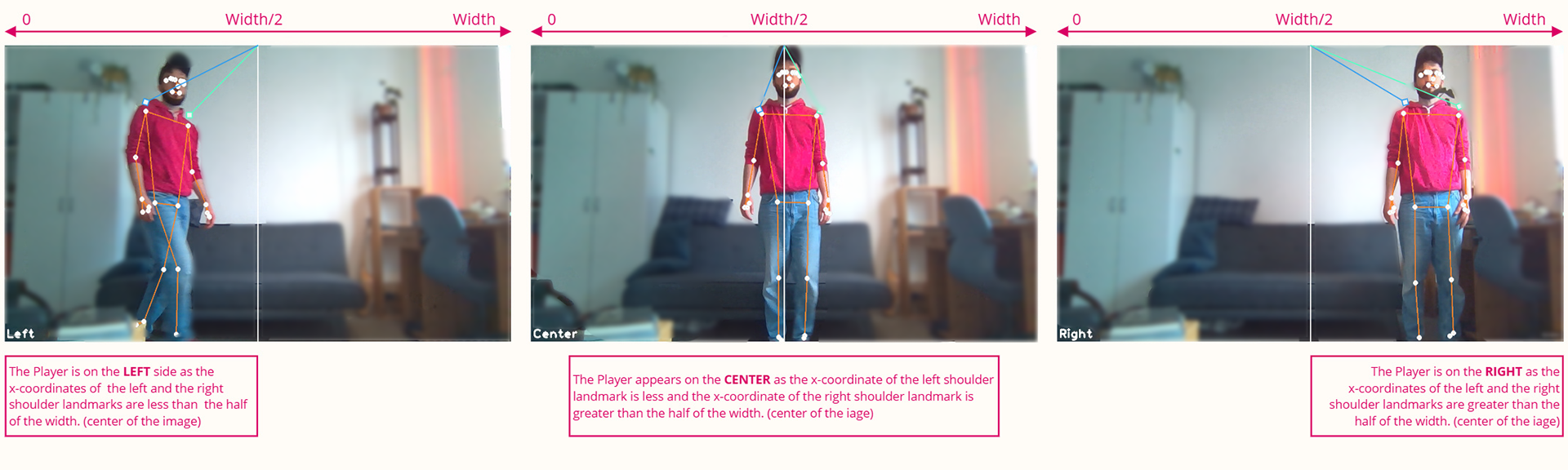

The idea is to track body movements in real time and translate them into keypresses, allowing us to control the game character with our own full-body movements instead of pressing keys on the keyboard or using a mouse.
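Translating a recognized movement into a keypress can be sketched as a small lookup table plus a guard that sends a key only when the detected action changes, so that holding a pose does not flood the game with repeated presses. This is a minimal sketch, not the project's code: the action names, the arrow-key mapping, and the `KeyDispatcher` class are assumptions for illustration. `pyautogui.press` is PyAutoGUI's real single-keypress function; it is imported lazily so the sketch loads without PyAutoGUI installed.

```python
from typing import Callable, Optional

# Hypothetical mapping from recognized body actions to keyboard keys.
# Arrow keys are an assumption; check the controls of the game being played.
ACTION_TO_KEY = {
    "left": "left",
    "right": "right",
    "jump": "up",
    "squat": "down",
}


def _pyautogui_press(key: str) -> None:
    # Imported lazily so this module can be loaded without PyAutoGUI.
    import pyautogui
    pyautogui.press(key)


class KeyDispatcher:
    """Hypothetical helper: one keypress each time the detected action changes."""

    def __init__(self, press: Callable[[str], None] = _pyautogui_press) -> None:
        self._press = press
        self._last_action: Optional[str] = None

    def update(self, action: Optional[str]) -> Optional[str]:
        """Press the key for `action` if it differs from the previous frame's action.

        `action` is None while the player stands in the neutral pose.
        Returns the key that was pressed, or None if nothing was sent.
        """
        key = None
        if action != self._last_action and action in ACTION_TO_KEY:
            key = ACTION_TO_KEY[action]
            self._press(key)
        self._last_action = action
        return key
```

Edge-triggering matters for lane-based runners: a player who keeps leaning left should change lanes once, not once per video frame. Passing `press=print` prints the keys instead of sending them, which is handy for tuning without a game window open.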

The project is built with MediaPipe's AI-powered pose detection solution, which provides the information needed to capture and interpret continuously changing body gestures and movements in real time, with OpenCV and PyAutoGUI as the other primary modules.
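The capture, detect, and act cycle can be sketched as a single loop. This is a minimal sketch under stated assumptions, not the project's actual script: it assumes the legacy `mediapipe.solutions.pose` API, webcam index 0, and a hypothetical `on_landmarks` callback that receives the 33 landmarks of each frame (for example, to classify a gesture and send a key through PyAutoGUI). The imports sit inside the function so the definition loads even where OpenCV and MediaPipe are not installed.

```python
from typing import Callable, Sequence


def run_motion_loop(on_landmarks: Callable[[Sequence], None],
                    camera_index: int = 0) -> None:
    """Read webcam frames, run MediaPipe pose detection, pass landmarks on.

    Press "q" in the preview window to stop.
    """
    import cv2
    import mediapipe as mp

    mp_pose = mp.solutions.pose
    mp_drawing = mp.solutions.drawing_utils

    cap = cv2.VideoCapture(camera_index)
    try:
        with mp_pose.Pose(min_detection_confidence=0.5,
                          min_tracking_confidence=0.5) as pose:
            while cap.isOpened():
                ok, frame = cap.read()
                if not ok:
                    break
                # Mirror the image so the player's left appears on the left of the screen.
                frame = cv2.flip(frame, 1)
                # MediaPipe expects RGB input; OpenCV delivers BGR frames.
                results = pose.process(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
                if results.pose_landmarks:
                    # 33 landmarks, each with normalized x/y, relative z, and visibility.
                    on_landmarks(results.pose_landmarks.landmark)
                    mp_drawing.draw_landmarks(frame, results.pose_landmarks,
                                              mp_pose.POSE_CONNECTIONS)
                cv2.imshow("Motion Controller", frame)
                if cv2.waitKey(1) & 0xFF == ord("q"):
                    break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Keeping the per-frame decision in a callback separates the OpenCV/MediaPipe plumbing from the gesture rules, so the rules can be tested on recorded coordinates without a camera.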

MediaPipe, developed by Google, is an open-source framework designed for real-time machine learning applications in streaming media. Its pose detection model, BlazePose, efficiently detects 33 3D landmarks on the human body, even on low-end devices, using deep learning to identify keypoints accurately.
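Each of the 33 landmarks has a fixed index, and its x and y are normalized to the image width and height (0 to 1, with y growing downward). The sketch below names a few indices a torso-based controller is likely to need, following the numbering of MediaPipe's `PoseLandmark` enum, and converts a normalized point to pixels. Which landmarks the original project relied on is not stated; the focus on shoulders and hips, and the helper names `midpoint` and `to_pixels`, are assumptions for illustration.

```python
from typing import Sequence, Tuple

# Indices from MediaPipe's PoseLandmark enum (0 = nose ... 32 = right foot index).
NOSE = 0
LEFT_SHOULDER, RIGHT_SHOULDER = 11, 12
LEFT_HIP, RIGHT_HIP = 23, 24
NUM_POSE_LANDMARKS = 33


def midpoint(points: Sequence[Tuple[float, float]], a: int, b: int) -> Tuple[float, float]:
    """Average of two landmarks given as normalized (x, y) pairs."""
    (xa, ya), (xb, yb) = points[a], points[b]
    return (xa + xb) / 2, (ya + yb) / 2


def to_pixels(x: float, y: float, width: int, height: int) -> Tuple[int, int]:
    """Convert normalized coordinates to integer pixel coordinates."""
    return int(x * width), int(y * height)
```

For a 640×480 frame, `to_pixels(0.5, 0.25, 640, 480)` returns `(320, 120)`. A midpoint of two landmarks is steadier than any single point, which helps when one shoulder is briefly occluded or jittery.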

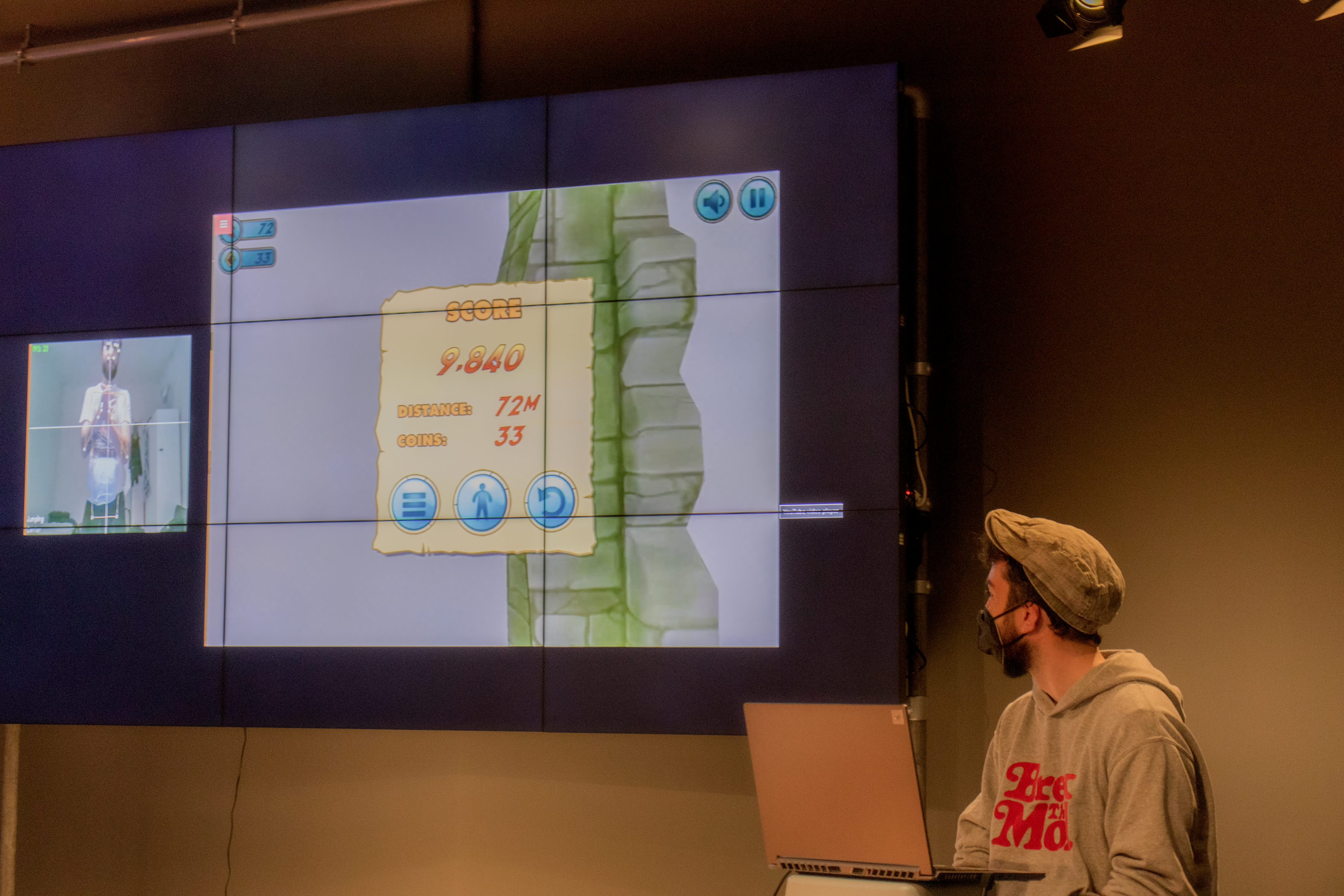

With the Motion Controller, we can play endless runner games such as Subway Surfers or Temple Run, which were chosen as examples because they are easily accessible online. Right after the Python script starts, a movement is captured from the computer's webcam to initialize the system. We, as players, can then control the game through body gestures and movements: the game character moves left, moves right, jumps, and squats (or ducks/slides, depending on the gameplay) in sync with the movements of the person controlling the game.
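The text does not say how movements are mapped to the four actions. One plausible approach, sketched here under stated assumptions, treats the initializing movement as calibration: record the shoulder midpoint while the player stands in a neutral pose, then compare each new frame against that baseline. Horizontal shifts become left/right, a rise becomes a jump, and a drop becomes a squat. The `Calibration` class, the `classify` function, the threshold values, and the use of the shoulder midpoint are all hypothetical, and the frame is assumed to be mirrored so that moving left decreases x.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Calibration:
    """Neutral-pose reference point (normalized image coordinates, y grows downward)."""
    x: float
    y: float


# Hypothetical thresholds in normalized units; tune for camera distance.
HORIZONTAL_THRESHOLD = 0.10
JUMP_THRESHOLD = 0.07
SQUAT_THRESHOLD = 0.10


def classify(x: float, y: float, base: Calibration) -> Optional[str]:
    """Map the current shoulder midpoint to an action, or None for neutral.

    Assumes a mirrored frame, so a smaller x means the player moved left.
    Vertical movement is checked first so a jump or squat wins over a
    small sideways drift.
    """
    dx, dy = x - base.x, y - base.y
    if dy < -JUMP_THRESHOLD:
        return "jump"   # body rose (y decreased)
    if dy > SQUAT_THRESHOLD:
        return "squat"  # body dropped (y increased)
    if dx < -HORIZONTAL_THRESHOLD:
        return "left"
    if dx > HORIZONTAL_THRESHOLD:
        return "right"
    return None
```

Comparing against a per-session baseline rather than fixed screen positions lets players of different heights, standing at different distances from the webcam, use the same rules; only the thresholds may need tuning.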

Images and videos courtesy of DE:Hive Institute, cross:play, Game Design, HTW Berlin. © All rights reserved.